Accuracy measures the percentage of correctly predicted labels in the test set. It is a useful metric, but it can be misleading when the classes are imbalanced: a model that always predicts the majority class can reach high accuracy while never identifying a single minority-class example.
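To make the imbalance problem concrete, here is a minimal sketch in plain Python. The label lists and the `accuracy` helper are illustrative assumptions, not part of any particular library.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

# Hypothetical imbalanced test set: 95 negatives, 5 positives.
y_true = [0] * 95 + [1] * 5
# A trivial "model" that always predicts the majority class.
y_pred = [0] * 100

acc = accuracy(y_true, y_pred)
print(acc)  # 0.95, even though no positive example was found
```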

Precision measures the percentage of true positives among all positive predictions. It is a useful metric when the goal is to minimize false positives.

Recall measures the percentage of true positives among all actual positives. It is a useful metric when the goal is to minimize false negatives.

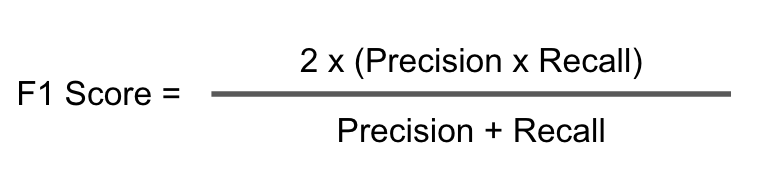
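Both metrics can be computed from counts of true positives (TP), false positives (FP), and false negatives (FN). The sketch below assumes binary labels with `1` as the positive class; the helper name and the example data are hypothetical.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    # Guard against division by zero when there are no positive
    # predictions (precision) or no actual positives (recall).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Hypothetical data: 4 actual positives, 3 positive predictions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.3f}, recall={recall:.3f}")
# precision=0.667 (2 of 3 positive predictions are correct)
# recall=0.500 (2 of 4 actual positives were found)
```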

F1 Score is the harmonic mean of precision and recall. It is a useful metric when you want to balance the importance of precision and recall.

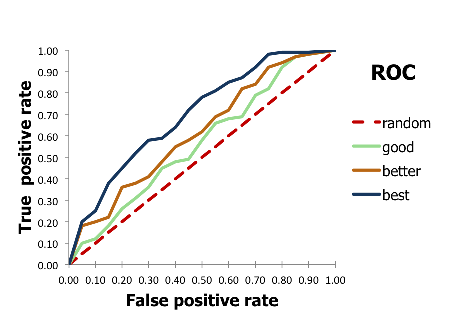
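A short sketch shows why the harmonic mean is used rather than the arithmetic mean: it stays low when either precision or recall is low. The input values below are made up for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

balanced = f1_score(0.8, 0.8)
lopsided = f1_score(0.95, 0.2)
arithmetic_mean = (0.95 + 0.2) / 2

print(f"{balanced:.3f}")         # 0.800
print(f"{lopsided:.3f}")         # 0.330
print(f"{arithmetic_mean:.3f}")  # 0.575, which hides the poor recall
```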

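The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 - specificity) as the classification threshold varies, showing the trade-off between sensitivity and specificity. AUC measures the area under the ROC curve: a value of 0.5 corresponds to random ranking and 1.0 to perfect separation of the classes. It is a useful metric when the goal is to balance sensitivity and specificity without committing to a single threshold.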

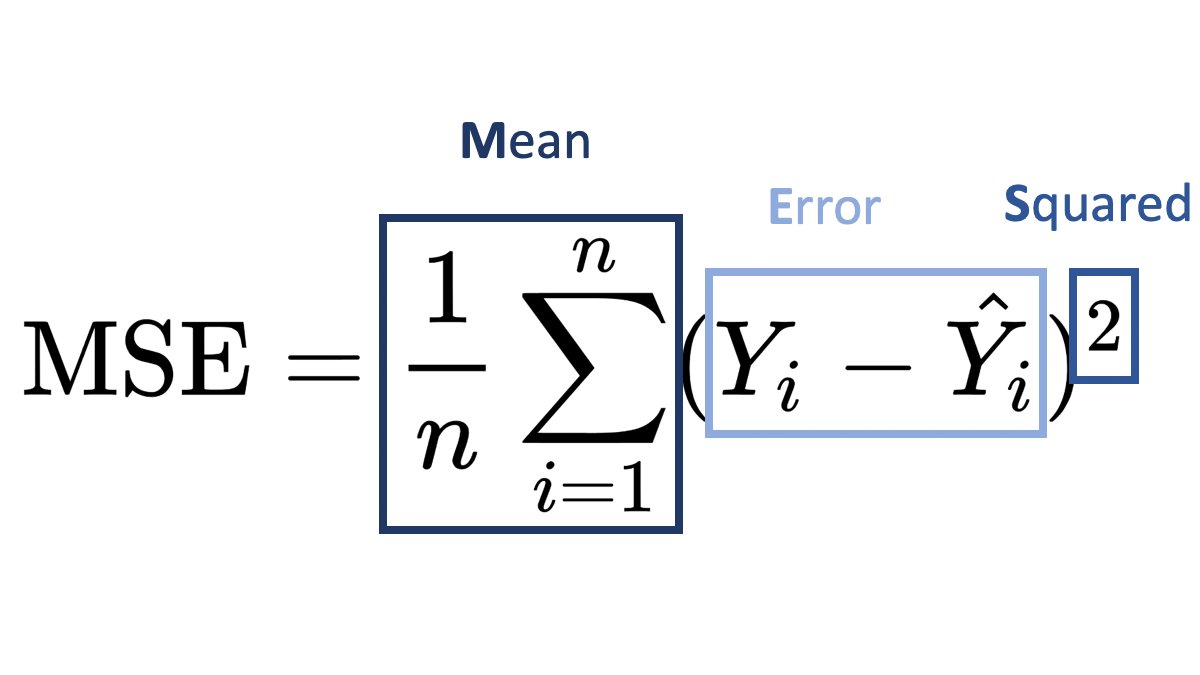
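One way to build intuition for AUC is through an equivalent formulation: it equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below uses that pairwise definition rather than integrating the curve; the function name and scores are illustrative assumptions, and the quadratic loop is only suitable for small inputs.

```python
def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))

# Hypothetical labels and model scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

auc = roc_auc(y_true, scores)
print(auc)  # 0.75: three of the four positive/negative pairs are ordered correctly
```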

MSE measures the average squared difference between the predicted and actual values. It is a commonly used metric in regression problems.

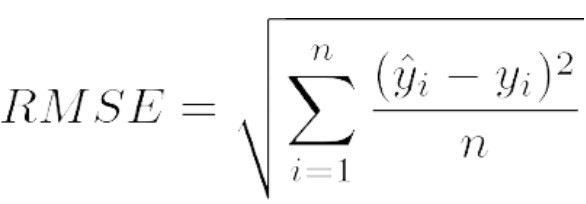
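As a minimal sketch, MSE can be computed directly from its definition. The target and prediction values here are invented for the example.

```python
def mse(y_true, y_pred):
    """Mean of the squared differences between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]

# Errors are 1, 0, -2, -1, so the squared errors are 1, 0, 4, 1.
error = mse(y_true, y_pred)
print(error)  # 1.5
```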

RMSE is the square root of MSE. It is not a plain average of the differences: because errors are squared before averaging, large errors contribute disproportionately. It is a useful metric when you want to express the error in the same units as the target variable.

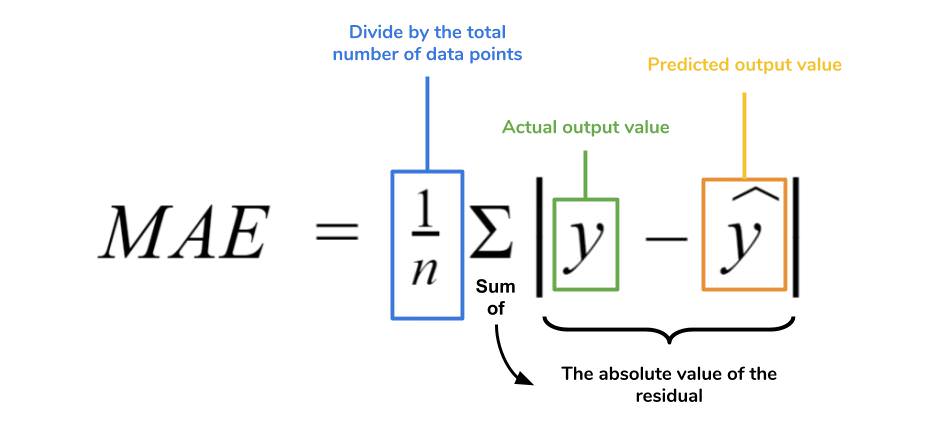
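The following sketch computes RMSE on a small, made-up example and compares it with the mean absolute error on the same data. Because squaring emphasizes the single large error, the RMSE comes out larger.

```python
import math

def rmse(y_true, y_pred):
    """Square root of the mean squared error, in the units of the target."""
    mean_sq = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mean_sq)

y_true = [10.0, 20.0, 30.0]
y_pred = [11.0, 21.0, 40.0]   # absolute errors: 1, 1, 10

root_error = rmse(y_true, y_pred)
mean_abs_error = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

print(f"{root_error:.2f}")      # 5.83, i.e. sqrt((1 + 1 + 100) / 3)
print(f"{mean_abs_error:.2f}")  # 4.00
```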

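MAE measures the average absolute difference between the predicted and actual values. Because the errors are not squared, a single large error affects MAE less than it affects MSE or RMSE, which makes it a useful metric when you want to reduce the influence of outliers.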
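To illustrate the difference in outlier sensitivity, this sketch evaluates MAE and MSE on the same hypothetical predictions with and without one large error. All values and helper names are assumptions made for the example.

```python
def mae(y_true, y_pred):
    """Mean of the absolute differences between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean of the squared differences, shown here for comparison."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [10.0, 12.0, 11.0, 13.0]
y_pred_clean = [11.0, 11.0, 12.0, 12.0]     # every error has magnitude 1
y_pred_outlier = [11.0, 11.0, 12.0, 33.0]   # last error has magnitude 20

mae_clean, mae_outlier = mae(y_true, y_pred_clean), mae(y_true, y_pred_outlier)
mse_clean, mse_outlier = mse(y_true, y_pred_clean), mse(y_true, y_pred_outlier)

print(mae_clean, mae_outlier)  # 1.0 5.75
print(mse_clean, mse_outlier)  # 1.0 100.75
```

A single outlier raises MAE by a factor of about 6 here, while MSE grows by a factor of about 100.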